Retrieval-Augmented Image Captioning

In this example, we show how to use LLaVA (served through Replicate) for image understanding and captioning. We then use that image understanding to retrieve relevant unstructured text and embedded tables from Tesla's 2021 10-K filing.

1. LLaVA provides image understanding based on a user prompt.
2. We use Unstructured to parse out the tables, and use LlamaIndex recursive retrieval to index and retrieve tables and text.
3. We use the image understanding from Step 1 to retrieve relevant information from the knowledge base built in Step 2 (indexed by LlamaIndex).
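The flow above (caption an image, then use the caption as a retrieval query) can be sketched as a small function that chains a captioner into a retriever. This is a minimal, hypothetical sketch. The names `caption_then_retrieve`, `fake_caption` and `keyword_retrieve` are illustrative only and are not LlamaIndex APIs. The keyword-overlap retriever stands in for the vector index used later, and the query prefix mirrors this notebook's prompt template.

```python
from typing import Callable, List

# Hypothetical stand-ins for the two stages: in the notebook, captioning is
# multi_modal_llm.complete(...) and retrieval is query_engine.query(...).
CaptionFn = Callable[[str, str], str]    # (prompt, image_path) -> caption text
RetrieveFn = Callable[[str], List[str]]  # query -> retrieved passages

QUERY_PREFIX = "please provide relevant information about: "


def caption_then_retrieve(
    caption_fn: CaptionFn,
    retrieve_fn: RetrieveFn,
    prompt: str,
    image_path: str,
) -> List[str]:
    """Describe the image, then use that description as the retrieval query."""
    caption = caption_fn(prompt, image_path).strip()
    return retrieve_fn(QUERY_PREFIX + caption)


# Toy stubs so the sketch runs without Replicate or an index.
def fake_caption(prompt: str, image_path: str) -> str:
    return "a Tesla factory located in Shanghai"


corpus = [
    "Gigafactory Shanghai is representative of our plan to improve manufacturing.",
    "Our products use aluminum, steel, cobalt, lithium, nickel and copper.",
]


def keyword_retrieve(query: str) -> List[str]:
    # Rank passages by how many query words they share (a crude stand-in for vector search).
    words = set(query.lower().split())
    scored = [(len(words & set(p.lower().split())), p) for p in corpus]
    return [p for score, p in sorted(scored, reverse=True) if score > 0]


results = caption_then_retrieve(fake_caption, keyword_retrieve, "which factory?", "shanghai.jpg")
print(results[0])
```

Injecting the two stages as callables keeps the sketch independent of any particular model host or index.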

Context for LLaVA: Large Language and Vision Assistant

LLaVA is supported in llama.cpp with 4-bit and 5-bit quantization. [Deprecated]
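Quantization is what makes running a model of this size locally practical. Here is a rough back-of-the-envelope sketch. It assumes weights dominate memory and that 1 GB = 10^9 bytes, so weight storage is parameters * bits per weight / 8. `weight_memory_gb` is a hypothetical helper, and real usage is higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Ignores quantization scales, the vision projector, the KV cache and
    runtime overhead, so actual memory use will be higher.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Compare a 13B-parameter model at half precision and at 5-bit/4-bit quantization.
for bits in (16, 5, 4):
    print(f"13B params at {bits}-bit: ~{weight_memory_gb(13e9, bits):.1f} GB")
```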

LLaVA 13B is available on Replicate.

For LlamaIndex: LLaVA on Replicate lets us run image understanding without hosting the model ourselves, and combine the multi-modal knowledge with our RAG knowledge base system.

TODO:

Wait for llama-cpp-python to support LLaVA in its Python wrapper, so that LlamaIndex can use the LlamaCPP class to serve LLaVA directly and locally.

Using Replicate to serve the LLaVA model through LlamaIndex

Build and Run LLaVA models locally with llama.cpp (Deprecated)

1. cd llama.cpp (check out the llama.cpp repo for more details).
2. make
3. Download the LLaVA models, including ggml-model-* and mmproj-model-*, from this Hugging Face repo. Select one model based on your local configuration.
4. Run ./llava to check whether LLaVA is running locally.

%pip install llama-index-readers-file

%pip install llama-index-multi-modal-llms-replicate

%load_ext autoreload
%autoreload 2

!pip install unstructured

from unstructured.partition.html import partition_html

import pandas as pd

pd.set_option("display.max_rows", None)
pd.set_option("display.max_columns", None)
pd.set_option("display.width", None)
pd.set_option("display.max_colwidth", None)


Perform Data Extraction from the Tesla 10-K file

In these sections we use Unstructured to parse out the table and non-table elements.

Extract Elements

We use Unstructured to extract table and non-table elements from the 10-K filing.
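Unstructured does this robustly on real filings. To illustrate the idea only, here is a standard-library sketch that separates text inside `<table>` elements from the surrounding text. It uses Python's `html.parser`, which is a swapped-in technique and not how Unstructured works internally. `TableSplitter` is a hypothetical name.

```python
from html.parser import HTMLParser
from typing import List


class TableSplitter(HTMLParser):
    """Collects text inside <table> elements separately from the rest of the page."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0                # > 0 while inside a (possibly nested) table
        self.tables: List[str] = []   # one text blob per top-level table
        self.text: List[str] = []     # non-table text fragments
        self._current: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            if self.depth == 0:
                self._current = []    # start collecting a new top-level table
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "table" and self.depth > 0:
            self.depth -= 1
            if self.depth == 0:
                self.tables.append(" ".join(self._current))

    def handle_data(self, data):
        chunk = data.strip()
        if not chunk:
            return                    # skip whitespace between tags
        if self.depth:
            self._current.append(chunk)
        else:
            self.text.append(chunk)


html = """
<p>Supply Chain</p>
<table><tr><td>Row A</td><td>1</td></tr></table>
<p>Our products use thousands of purchased parts.</p>
"""
splitter = TableSplitter()
splitter.feed(html)
splitter.close()
print(splitter.text)    # non-table elements
print(splitter.tables)  # table elements, flattened to text
```

A real parser also keeps each table's structure (rows and cells), which is what makes the later table summaries useful. This sketch flattens it away.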

!wget "https://www.dropbox.com/scl/fi/mlaymdy1ni1ovyeykhhuk/tesla_2021_10k.htm?rlkey=qf9k4zn0ejrbm716j0gg7r802&dl=1" -O tesla_2021_10k.htm

!wget "https://docs.google.com/uc?export=download&id=1THe1qqM61lretr9N3BmINc_NWDvuthYf" -O shanghai.jpg

!wget "https://docs.google.com/uc?export=download&id=1PDVCf_CzLWXNnNoRV8CFgoJxv6U0sHAO" -O tesla_supercharger.jpg

from llama_index.readers.file import FlatReader
from pathlib import Path

reader = FlatReader()
docs_2021 = reader.load_data(Path("tesla_2021_10k.htm"))

from llama_index.core.node_parser import UnstructuredElementNodeParser

node_parser = UnstructuredElementNodeParser()

import os

REPLICATE_API_TOKEN = "..."  # Your Replicate API token here
os.environ["REPLICATE_API_TOKEN"] = REPLICATE_API_TOKEN

import openai

OPENAI_API_TOKEN = "sk-..."  # Your OpenAI API key here
openai.api_key = OPENAI_API_TOKEN
os.environ["OPENAI_API_KEY"] = OPENAI_API_TOKEN
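Hard-coding keys in a notebook makes them easy to leak. One alternative is to read the keys from the environment and fail early while a placeholder is still set. This sketch assumes you export the keys in your shell before starting Jupyter, and `require_env` is a hypothetical helper.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable, failing early if it is unset or still a placeholder."""
    value = os.environ.get(name, "").strip()
    # Treat empty values and placeholders like "..." or "sk-..." as missing.
    if not value or value.endswith("..."):
        raise RuntimeError(f"Set {name} before running the notebook.")
    return value


# Hypothetical usage in this notebook:
# REPLICATE_API_TOKEN = require_env("REPLICATE_API_TOKEN")
# OPENAI_API_TOKEN = require_env("OPENAI_API_KEY")
```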

import pickle

# Parse the 10-K once and cache the raw nodes to avoid re-running the (potentially slow) parser
if not os.path.exists("2021_nodes.pkl"):
    raw_nodes_2021 = node_parser.get_nodes_from_documents(docs_2021)
    with open("2021_nodes.pkl", "wb") as f:
        pickle.dump(raw_nodes_2021, f)
else:
    with open("2021_nodes.pkl", "rb") as f:
        raw_nodes_2021 = pickle.load(f)
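The cache-or-compute pattern above can be factored into a small reusable helper. This is a hedged sketch, and `load_or_compute` is a hypothetical name. The cache is not invalidated when the parser settings or the source file change, and pickle files should only be loaded from trusted sources.

```python
import os
import pickle
from typing import Callable, TypeVar

T = TypeVar("T")


def load_or_compute(path: str, compute: Callable[[], T]) -> T:
    """Return the pickled result at `path`, computing and caching it on first use."""
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    result = compute()
    with open(path, "wb") as f:
        pickle.dump(result, f)
    return result


# Hypothetical usage in this notebook:
# raw_nodes_2021 = load_or_compute(
#     "2021_nodes.pkl", lambda: node_parser.get_nodes_from_documents(docs_2021)
# )
```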

nodes_2021, objects_2021 = node_parser.get_nodes_and_objects(raw_nodes_2021)

Set Up a Composable Retriever

Now that we’ve extracted the tables and their summaries, we can set up a composable retriever in LlamaIndex to query these tables.
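Conceptually, recursive retrieval works like this. The top-level index holds ordinary text nodes plus short summary nodes that reference full table objects. When a reference node lands in the top-k hits, the retriever "enters" it and returns the underlying object. This is what log lines such as "Retrieval entering id_1836_table" later in this notebook describe. The toy sketch below is an illustration under stated assumptions, not LlamaIndex's implementation. It uses bag-of-words cosine similarity instead of learned embeddings, and `Node`, `embed` and `recursive_retrieve` are hypothetical names.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    text: str                     # what gets embedded and matched
    ref_id: Optional[str] = None  # if set, points at a full object (e.g. a table)


def embed(text: str) -> Counter:
    # Toy "embedding": bag-of-words counts (a real index uses an embedding model).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recursive_retrieve(query: str, nodes: List[Node], objects: Dict[str, str], top_k: int = 2) -> List[str]:
    q = embed(query)
    # Rank top-level nodes by similarity and keep the top_k hits.
    ranked = sorted(nodes, key=lambda n: cosine(q, embed(n.text)), reverse=True)[:top_k]
    results = []
    for node in ranked:
        if node.ref_id is not None:
            # "Enter" the reference: return the full object, not just its summary.
            results.append(objects[node.ref_id])
        else:
            results.append(node.text)
    return results


objects = {"table_1": "<full table text for table_1>"}
nodes = [
    Node("summary of a table about supercharger stations", ref_id="table_1"),
    Node("raw materials include aluminum steel cobalt lithium nickel copper"),
    Node("gigafactory shanghai localized procurement and manufacturing"),
]
print(recursive_retrieve("supercharger stations", nodes, objects, top_k=1))
```

Matching against the short summary but returning the full table lets the retriever match a table by meaning while still handing the whole table to the LLM.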

Construct Retrievers

from llama_index.core import VectorStoreIndex

# construct top-level vector index + query engine
vector_index = VectorStoreIndex(nodes=nodes_2021, objects=objects_2021)
query_engine = vector_index.as_query_engine(similarity_top_k=2, verbose=True)

from PIL import Image

import matplotlib.pyplot as plt

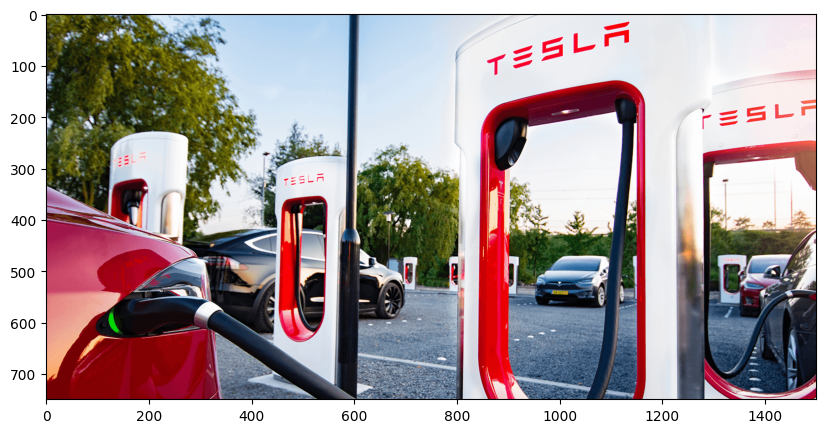

imageUrl = "./tesla_supercharger.jpg"

image = Image.open(imageUrl).convert("RGB")

plt.figure(figsize=(16, 5))

plt.imshow(image)

<matplotlib.image.AxesImage at 0x7f24f9bb8410>

Running the LLaVA model using Replicate through LlamaIndex for image understanding

from llama_index.multi_modal_llms.replicate import ReplicateMultiModal
from llama_index.core.schema import ImageDocument
from llama_index.multi_modal_llms.replicate.base import (
    REPLICATE_MULTI_MODAL_LLM_MODELS,
)

# Use the LLaVA 13B model hosted on Replicate
multi_modal_llm = ReplicateMultiModal(
    model=REPLICATE_MULTI_MODAL_LLM_MODELS["llava-13b"],
    max_new_tokens=200,
    temperature=0.1,
)

prompt = "what is the main object for tesla in the image?"

# Ask LLaVA to describe the image; the text of this response becomes the retrieval query
llava_response = multi_modal_llm.complete(
    prompt=prompt,
    image_documents=[ImageDocument(image_path=imageUrl)],
)
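The model above is configured with `temperature=0.1`. The sketch below is a generic illustration of what a low sampling temperature usually does. It is not a description of Replicate's or LLaVA's actual sampler. It applies a temperature-scaled softmax to toy logits, and `softmax_with_temperature` is a hypothetical helper.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing them by `temperature` (must be > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # toy next-token scores
for t in (1.0, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the highest-scoring token, which tends to make image descriptions more consistent from run to run.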

Retrieve relevant information from the LlamaIndex knowledge base based on LLaVA's image understanding

prompt_template = "please provide relevant information about: "
rag_response = query_engine.query(prompt_template + llava_response.text)

Retrieval entering id_1836_table: TextNode

Retrieving from object TextNode with query please provide relevant information about: The main object for Tesla in the image is a red and white electric car charging station.

Retrieval entering id_431_table: TextNode

Retrieving from object TextNode with query please provide relevant information about: The main object for Tesla in the image is a red and white electric car charging station.

Showing final RAG image caption results from LlamaIndex

print(str(rag_response))

The main object for Tesla in the image is a red and white electric car charging station.

from PIL import Image
import matplotlib.pyplot as plt

imageUrl = "./shanghai.jpg"
image = Image.open(imageUrl).convert("RGB")

plt.figure(figsize=(16, 5))
plt.imshow(image)

Retrieve relevant information from LlamaIndex for a new image

prompt = "which Tesla factory is shown in the image?"

llava_response = multi_modal_llm.complete(
    prompt=prompt,
    image_documents=[ImageDocument(image_path=imageUrl)],
)

prompt_template = "please provide relevant information about: "
rag_response = query_engine.query(prompt_template + llava_response.text)

Retrieving with query id None: please provide relevant information about: a large Tesla factory with a white roof, located in Shanghai, China. The factory is surrounded by a parking lot filled with numerous cars, including both small and large vehicles. The cars are parked in various positions, some closer to the factory and others further away. The scene gives an impression of a busy and well-organized facility, likely producing electric vehicles for the global market

Retrieved node with id, entering: id_431_table

Retrieving with query id id_431_table: please provide relevant information about: a large Tesla factory with a white roof, located in Shanghai, China. The factory is surrounded by a parking lot filled with numerous cars, including both small and large vehicles. The cars are parked in various positions, some closer to the factory and others further away. The scene gives an impression of a busy and well-organized facility, likely producing electric vehicles for the global market

Retrieving text node: We continue to increase the degree of localized procurement and manufacturing there. Gigafactory Shanghai is representative of our plan to iteratively improve our manufacturing operations as we establish new factories, as we implemented the learnings from our Model 3 and Model Y ramp at the Fremont Factory to commence and ramp our production at Gigafactory Shanghai quickly and cost-effectively.

Other Manufacturing

Generally, we continue to expand production capacity at our existing facilities. We also intend to further increase cost-competitiveness in our significant markets by strategically adding local manufacturing, including at Gigafactory Berlin in Germany and Gigafactory Texas in Austin, Texas, which will begin production in 2022.

Supply Chain

Our products use thousands of purchased parts that are sourced from hundreds of suppliers across the world. We have developed close relationships with vendors of key parts such as battery cells, electronics and complex vehicle assemblies. Certain components purchased from these suppliers are shared or are similar across many product lines, allowing us to take advantage of pricing efficiencies from economies of scale.

As is the case for most automotive companies, most of our procured components and systems are sourced from single suppliers. Where multiple sources are available for certain key components, we work to qualify multiple suppliers for them where it is sensible to do so in order to minimize production risks owing to disruptions in their supply. We also mitigate risk by maintaining safety stock for key parts and assemblies and die banks for components with lengthy procurement lead times.

Our products use various raw materials including aluminum, steel, cobalt, lithium, nickel and copper. Pricing for these materials is governed by market conditions and may fluctuate due to various factors outside of our control, such as supply and demand and market speculation. We strive to execute long-term supply contracts for such materials at competitive pricing when feasible, and we currently believe that we have adequate access to raw materials supplies in order to meet the needs of our operations.

Governmental Programs, Incentives and Regulations

Globally, both the operation of our business by us and the ownership of our products by our customers are impacted by various government programs, incentives and other arrangements. Our business and products are also subject to numerous governmental regulations that vary among jurisdictions.

Programs and Incentives

California Alternative Energy and Advanced Transportation Financing Authority Tax Incentives

We have agreements with the California Alternative Energy and Advanced Transportation Financing Authority that provide multi-year sales tax exclusions on purchases of manufacturing equipment that will be used for specific purposes, including the expansion and ongoing development of electric vehicles and powertrain production in California, thus reducing our cost basis in the related assets in our consolidated financial statements included elsewhere in this Annual Report on Form 10-K.

Gigafactory Nevada—Nevada Tax Incentives

In connection with the construction of Gigafactory Nevada, we entered into agreements with the State of Nevada and Storey County in Nevada that provide abatements for specified taxes, discounts to the base tariff energy rates and transferable tax credits in consideration of capital investment and hiring targets that were met at Gigafactory Nevada. These incentives are available until June 2024 or June 2034, depending on the incentive and primarily offset related costs in our consolidated financial statements included elsewhere in this Annual Report on Form 10-K.

Gigafactory New York—New York State Investment and Lease

We have a lease through the Research Foundation for the State University of New York (the “SUNY Foundation”) with respect to Gigafactory New York. Under the lease and a related research and development agreement, we are continuing to designate further buildouts at the facility. We are required to comply with certain covenants, including hiring and cumulative investment targets. This incentive offsets the related lease costs of the facility in our consolidated financial statements included elsewhere in this Annual Report on Form 10-K.

As we temporarily suspended most of our manufacturing operations at Gigafactory New York pursuant to a New York State executive order issued in March 2020 as a result of the COVID-19 pandemic, we were granted a deferral of our obligation to be compliant with our applicable targets through December 31, 2021 in an amendment memorialized in August 2021. As of December 31, 2021, we are in excess of such targets relating to investments and personnel in the State of New York and Buffalo.

Gigafactory Shanghai—Land Use Rights and Economic Benefits

We have an agreement with the local government of Shanghai for land use rights at Gigafactory Shanghai. Under the terms of the arrangement, we are required to meet a cumulative capital expenditure target and an annual tax revenue target starting at the end of 2023. In addition, the Shanghai government has granted to our Gigafactory Shanghai subsidiary certain incentives to be used in connection with eligible capital investments at Gigafactory Shanghai.

Showing final RAG image caption results from LlamaIndex

print(rag_response)

The Gigafactory Shanghai in Shanghai, China is a large Tesla factory that produces electric vehicles for the global market. The factory has a white roof and is surrounded by a parking lot filled with numerous cars, including both small and large vehicles. The cars are parked in various positions, some closer to the factory and others further away. This scene gives an impression of a busy and well-organized facility.